Reporting automation workflow


Introduction

I work for a retail company and one of the consequences of this is IT not being a core competency of the organization. This is driven by a variety of factors—from internal customer demand to the sort of talent the organization attracts to where budget money gets allocated—but the net impact is an IT infrastructure and tooling that can be extremely limiting. This is in stark contrast with my peers, many of whom work in tech directly or in closely related fields, for whom provisioning a new VM is as simple as clicking a few buttons on an internal tool.

As an analyst, my work involves a lot of data wrangling. Most of this can and should be automated, but our IT limitations make that difficult. The available tools usually don't offer APIs, and if they have reporting at all, it's to PDF or RTF documents formatted for printing and faxing (and it can't be scheduled).

These limitations have offered opportunities to get creative. This post outlines some of the convoluted processes I use to automate reporting with constraints on data retrieval, data processing, and report distribution.

Overview

The final product of most of my reporting is an email with some summary information in the body and an Excel spreadsheet attached.[1] The data wrangling and report generation process usually looks like this:

- Collect - This may be via emailed reports, web scrapers, APIs, SFTP uploads, or manual downloads.

- Clean up and normalize - Ingest the collected data, clean it up, and normalize the information to a standard (e.g., use usernames rather than employee IDs or system IDs).

- Transform and summarize - Take the raw data and transform it to get whatever metrics and information we're looking for in the report. Summarize the transformed data along various groupings and time periods (e.g., by department, by team, by month, by week, etc.). Identify outliers and trends.

- Generate the spreadsheet - Organize and format the data into a spreadsheet. Add conditional formatting, charts, etc.

- Send emails - Send different emails for different slices (e.g., associate, team, department). Include a summary of the report highlights in the email body.

1. Collect the data

This step is much easier in an organization with a centralized data warehouse, APIs to access it, and comprehensive data coverage. Lacking all of the above, I make do with whatever data tools I can cobble together, which varies a lot depending on where I'm getting the data.

Scheduled emails (usually CSVs)

```vba
' Simple VBA script to save an email's attachments to a specific folder. Run
' this as a mail rule on messages you want to save attachments from.
Public Sub saveAttachtoDisk(itm As Outlook.MailItem)
    Dim objAtt As Outlook.Attachment
    Dim saveFolder As String
    saveFolder = "C:\Users\ryan\Nextcloud\Reports\Incoming"
    For Each objAtt In itm.Attachments
        objAtt.SaveAsFile saveFolder & "\" & objAtt.DisplayName
        Set objAtt = Nothing
    Next
End Sub
```

Save attachments to a folder and rename them (StackOverflow)

The biggest obstacle here is having to have something running to collect the attachments. With Exchange and lack of IT support at work, this means having a laptop connected to VPN with Outlook open running a VBA script to download attachments. Not optimal. Outlook likes to throw a modal warning about saving open attachments sometimes, which blocks all rule execution until it's dismissed. Data flexibility also suffers, since the period is fixed for each generated report. If your data isn't static until a certain point (e.g., while contacts are still being worked on from the previous day), you don't get the freshest data.

SFTP uploads

Same drawbacks as the emailed reports, but minus the dependency on Outlook. It requires more help from IT, though, to have an SFTP server available. In my experience, fewer platforms support this than email.

One approach here is to watch the upload folder with `incron` for inotify events (e.g., `IN_CLOSE_WRITE`) and execute a script on the newly uploaded file. If you're uploading many different report files to the same directory, then using a bash script to sort out which report script to run on each file may be helpful.
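The text above suggests a bash script for the sorting step. The same dispatch idea can be sketched in Python, which keeps it in the same language as the report scripts. The filename patterns and script paths here are hypothetical, and the sketch assumes the watcher passes the uploaded file's path as the first argument.

```python
import fnmatch
import subprocess
import sys
from pathlib import Path

# Hypothetical mapping of upload filename patterns to report scripts.
ROUTES = [
    ("contacts_*.csv", "reports/contact_reasons.py"),
    ("timecards_*.csv", "reports/timecards.py"),
]


def pick_script(filename, routes=ROUTES):
    """Return the report script for the first matching pattern, or None."""
    name = Path(filename).name
    for pattern, script in routes:
        if fnmatch.fnmatch(name, pattern):
            return script
    return None


def main(argv):
    """Run the matching report script on the uploaded file named in argv[1]."""
    if len(argv) < 2:
        print("usage: dispatch.py <uploaded-file>", file=sys.stderr)
        return 2
    uploaded = argv[1]
    script = pick_script(uploaded)
    if script is None:
        print(f"No report registered for {uploaded}", file=sys.stderr)
        return 1
    # Hand the file to the report script using the current interpreter.
    return subprocess.run([sys.executable, script, uploaded]).returncode
```

Calling `sys.exit(main(sys.argv))` at the bottom of the file turns this into the command the watcher runs. Unmatched files are reported rather than silently dropped, which helps when a vendor renames an export.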

Web scraping

When a real data export is not available, information can often be scraped via a web interface. This involves either actual browser automation like Selenium or a crawler to download pages and process the HTML (e.g., Beautiful Soup, Scrapy). Selenium is well documented and everybody uses it, so you won't usually have problems figuring out how to implement things. If your web interface isn't completely user-hostile, Scrapy is good for pulling down a lot of data quickly.

The worst I've had to work with was getting timecards from Kronos, as the DOM is constantly being modified by JavaScript, they have never heard of the semantic web (so element selection is nearly impossible), and state is all kept server-side (so you can't just navigate to a URL, you have to actually click through to the destination as part of the scraping process and none of it can be parallelized). If you don't want the process to take 3 hours by relying on sleeps to try to hit the 99th percentile for the DOM to settle, you have to get fancy in your Selenium waits (e.g., if this block of red status text appears, disappears, appears, and disappears, and this child element of this child element of [...] this class is visible, then the page is done loading). Even then that only gets you down to about a 20-minute run time.
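The "appears, disappears, appears, disappears" style of wait can be sketched as a small polling helper. This is a generic stand-in, not Selenium's API: `probe` is a hypothetical callable that would wrap something like an element-visibility check. Selenium's own `WebDriverWait` provides the underlying polling primitive in a real scraper.

```python
import time


def wait_for_sequence(probe, expected, timeout=30.0, poll=0.1):
    """Poll probe() until it has returned each value in `expected`, in order.

    Raises TimeoutError if the full sequence is not observed in time.
    """
    deadline = time.monotonic() + timeout
    remaining = list(expected)
    while remaining:
        if probe() == remaining[0]:
            remaining.pop(0)  # this state was observed; look for the next one
            continue
        if time.monotonic() >= deadline:
            raise TimeoutError(f"still waiting for states: {remaining}")
        time.sleep(poll)
```

For the status-text example, `expected` would be `[True, False, True, False]`, with `probe` reporting whether the text is currently visible. Polling can miss a state that lasts less than one poll interval, so the interval has to be shorter than the briefest flash you care about.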

A friendlier web interface will give you sensible URLs that allow you to access information directly. For example, if you're trying to grab associate schedules from a workforce management platform, being able to pull up a specific time period for a specific associate with the user ID and date allows you to grab a bunch of pages at once and parse the HTML into usable data.
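With URLs like that, fetching and parsing can be sketched using only the standard library. The endpoint, query parameter names, and the assumption that the schedule is rendered as table cells are all hypothetical. Beautiful Soup would do the parsing more comfortably if it's available.

```python
from concurrent.futures import ThreadPoolExecutor
from html.parser import HTMLParser
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "https://wfm.example.com/schedule"  # hypothetical endpoint


def schedule_url(user_id, day):
    """Build the direct URL for one associate and one date."""
    query = urlencode({"user": user_id, "date": day.isoformat()})
    return f"{BASE_URL}?{query}"


class CellParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by table row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag == "td":
            if not self.rows:  # tolerate a cell with no enclosing row
                self.rows.append([])
            self._in_cell = True
            self.rows[-1].append("")

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.rows[-1][-1] += data


def parse_rows(html):
    """Return the non-empty table rows as lists of stripped cell text."""
    parser = CellParser()
    parser.feed(html)
    return [[cell.strip() for cell in row] for row in parser.rows if row]


def fetch(url):
    with urlopen(url, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")


def fetch_schedules(user_ids, days, max_workers=8):
    """Download every user/date page in parallel and parse each one."""
    urls = [schedule_url(user, day) for user in user_ids for day in days]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return {url: parse_rows(page) for url, page in zip(urls, pool.map(fetch, urls))}
```

The worker count is a guess. A small internal server may need a lower number to stay responsive.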

APIs

```
DEBUG:__main__:Getting data from 2020-03-02 to 2020-11-14...
DEBUG:__main__:Processing 19 chunks for date range...
DEBUG:__main__:Getting chunk 1 from 3/2/2020+4:00:00+AM to 3/16/2020+3:59:59+AM...
DEBUG:__main__:Getting chunk 4 from 4/13/2020+4:00:00+AM to 4/27/2020+3:59:59+AM...
DEBUG:__main__:Getting chunk 3 from 3/30/2020+4:00:00+AM to 4/13/2020+3:59:59+AM...
DEBUG:__main__:Getting chunk 5 from 4/27/2020+4:00:00+AM to 5/11/2020+3:59:59+AM...
DEBUG:__main__:Getting chunk 2 from 3/16/2020+4:00:00+AM to 3/30/2020+3:59:59+AM...
DEBUG:__main__:Took 8.64 seconds to get 51 lines for chunk 1.
DEBUG:__main__:Getting chunk 6 from 5/11/2020+4:00:00+AM to 5/25/2020+3:59:59+AM...
DEBUG:__main__:Took 10.28 seconds to get 6852 lines for chunk 2.
DEBUG:__main__:Getting chunk 7 from 5/25/2020+4:00:00+AM to 6/8/2020+3:59:59+AM...
```

Ideally everything would offer an API. You don't have to store data locally, you get access to the most current data, and you can consume several different sources relatively easily. It also means running the reporting script and getting the data is all one step. Not having to store data locally is particularly useful when you want several people to be able to run reporting without maintaining their own data stores or fumbling around with SQLite databases on a shared drive.[2] Get them an API key and they're good to go.

Quality can vary significantly, though, depending on the competency and focus of a given vendor. The initial time investment to set up API handling can be high, too. You have to work out how to authenticate against the API and which methods expose the data you need, and documentation quality varies with API quality. If you're fetching large amounts of data, client-side logic may be required to break the request up into smaller pieces and send them in parallel. API queries will also be slower than local database access if you're pulling a large amount of raw data through the API rather than relying on server-side summarization.
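The chunk-and-parallelize logic can be sketched roughly as follows. The 14-day window matches the log excerpt above. The worker count and `fetch_chunk`, a caller-supplied function that requests one window and returns its rows, are assumptions for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import date, timedelta


def date_chunks(start, end, days=14):
    """Split [start, end) into consecutive windows of at most `days` days."""
    chunks = []
    cursor = start
    while cursor < end:
        upper = min(cursor + timedelta(days=days), end)
        chunks.append((cursor, upper))
        cursor = upper
    return chunks


def fetch_range(fetch_chunk, start, end, days=14, max_workers=5):
    """Fetch every chunk in parallel and return the rows in chronological order."""
    chunks = date_chunks(start, end, days)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order even when requests finish out of order.
        results = list(pool.map(lambda chunk: fetch_chunk(*chunk), chunks))
    return [row for rows in results for row in rows]


chunks = date_chunks(date(2020, 3, 2), date(2020, 11, 14))
print(f"Processing {len(chunks)} chunks for date range...")
```

Requests may complete out of order, as in the log, but the combined result stays chronological because the results are collected in the order the chunks were submitted.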

Manually

Some platforms don't offer any way to automate the report generation and you'll have to manually go click the buttons to download a report. A tool like Hazel or Maid can save you the steps of having to rename and sort the report file once downloaded (and trigger the reporting script as part of those steps). You can potentially use the web scraping approach (Selenium) to automate the button clicking, though.

2. Clean and normalize

Data consumed from different sources may have different identifiers, data formatting, or other issues. These discrepancies need to be conformed to a standard. This may involve cross-referencing certain data (e.g., a platform-specific user ID to the associate's Active Directory username) or normalizing time zones. Different data channels on the same platform may offer different identifiers (e.g., one report gives full name, another gives agent ID). I maintain a local cross-reference CSV for matching all of these up.
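A minimal sketch of the cross-reference lookup, using only the standard library. The column names (`username`, `agent_id`, `full_name`) are hypothetical. The idea is that any identifier a platform hands back resolves to the same username.

```python
import csv
import io


def load_xref(csv_file):
    """Map every known identifier (agent ID, full name, ...) to a username."""
    lookup = {}
    for row in csv.DictReader(csv_file):
        username = row["username"]
        for column, value in row.items():
            if column and column != "username" and value:
                lookup[value.strip().lower()] = username
    return lookup


def normalize(records, lookup, id_field):
    """Yield copies of records with a `username` key added (None if unmatched)."""
    for record in records:
        key = str(record[id_field]).strip().lower()
        yield {**record, "username": lookup.get(key)}


# Hypothetical cross-reference file contents.
XREF_CSV = "username,agent_id,full_name\njdoe,10482,Jane Doe\n"

lookup = load_xref(io.StringIO(XREF_CSV))
by_agent_id = list(normalize([{"agent_id": 10482, "calls": 3}], lookup, "agent_id"))
by_full_name = list(normalize([{"name": "JANE DOE"}], lookup, "name"))
```

Unmatched records come back with `username` set to `None` instead of being dropped, so new hires missing from the cross-reference file are easy to spot.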

Python with the Pandas library is probably the best approach for most people. R and Julia are also worth looking into, but have a higher skill floor than Python. Python has an extremely comprehensive catalogue of libraries, so for almost any export or integration there will likely be something pre-built for you to lean on.

Local caching

It may be worthwhile to maintain a local database of the API request results, especially if you're pulling in a large amount of raw data (e.g., individual contacts over a year period) that you don't expect to change over time. SQLite is easy if you just want a file database and don't need to share it with a lot of users. Some providers will only store raw data like this for a limited period of time, so it may also be useful for archival purposes.
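A local SQLite archive along these lines might look like the sketch below. The table layout and column names are hypothetical. Timestamps are assumed to be ISO 8601 strings, which sort correctly as text.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS contacts (
    contact_id TEXT PRIMARY KEY,
    started_at TEXT NOT NULL,
    username   TEXT,
    reason     TEXT
)
"""


def open_cache(path):
    """Open (or create) the cache database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def store_contacts(conn, contacts):
    """Insert contact dicts, replacing any row that has the same contact_id."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO contacts "
            "VALUES (:contact_id, :started_at, :username, :reason)",
            contacts,
        )


def contacts_between(conn, start, end):
    """Return contacts with start <= started_at < end, oldest first."""
    cursor = conn.execute(
        "SELECT contact_id, started_at, username, reason FROM contacts "
        "WHERE started_at >= ? AND started_at < ? ORDER BY started_at",
        (start, end),
    )
    return cursor.fetchall()
```

Because `contact_id` is the primary key, re-running an import for an overlapping date range replaces rows instead of duplicating them.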

Caching requests across script runs can also be helpful, especially when you're testing. Stashing the data to a CSV (easy to understand) or a Python Pickle file (faster) and checking `os.path.getmtime` for the last modification time as a basic TTL cache may speed up your development work a lot if you're not waiting on API requests.
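A minimal sketch of that mtime-based TTL cache, assuming the data fits in a single Pickle file. `fetch` is a hypothetical zero-argument function that performs the real API request.

```python
import os
import pickle
import time


def cached(path, ttl_seconds, fetch):
    """Return the pickled result at `path` if it is fresh, else refetch and store it."""
    try:
        age = time.time() - os.path.getmtime(path)
    except OSError:  # no cache file yet
        age = None
    if age is not None and age < ttl_seconds:
        with open(path, "rb") as handle:
            return pickle.load(handle)
    data = fetch()
    with open(path, "wb") as handle:
        pickle.dump(data, handle)
    return data
```

Usage would be something like `cached("contacts.pkl", 3600, get_contacts)`, where `get_contacts` is your own request function. Only unpickle files your own scripts wrote, since loading a Pickle file can execute arbitrary code.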

3. Transform and summarize

Once the data has been cleaned up, it needs to be transformed to get the information needed by the report. For example, for a report on contacts that were not tagged with a contact reason that's generated from a list of raw contacts, the contact data must be filtered to match those that meet the criteria (e.g., longer than 10 seconds, not having a disposition like "Voicemail" or "Ghost", and without a contact reason tag).

Once the desired data is identified, it can be summarized. For contacts without a tagged contact reason, the report shows contacts missing reason tags, as well as contacts missing notes, as a percentage of all contacts handled. This is sliced by department, team, and associate, and by year, month, week, and day.
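The filter-then-summarize step for the missing-reason report could be sketched like this in plain Python. The field names (`duration_s`, `disposition`, `reason`) are hypothetical. With Pandas the same logic would typically be a boolean mask followed by a groupby.

```python
from collections import defaultdict

EXCLUDED_DISPOSITIONS = {"Voicemail", "Ghost"}


def is_handled(contact):
    """Keep contacts longer than 10 seconds that were not voicemail or ghost calls."""
    return (
        contact["duration_s"] > 10
        and contact["disposition"] not in EXCLUDED_DISPOSITIONS
    )


def missing_reason_summary(contacts, group_key):
    """Per group: handled contacts, contacts missing a reason tag, and the percentage."""
    totals = defaultdict(lambda: {"handled": 0, "missing_reason": 0})
    for contact in filter(is_handled, contacts):
        bucket = totals[contact[group_key]]
        bucket["handled"] += 1
        if not contact.get("reason"):
            bucket["missing_reason"] += 1
    return {
        group: {
            **counts,
            "pct_missing": round(100 * counts["missing_reason"] / counts["handled"], 1),
        }
        for group, counts in totals.items()
    }


contacts = [
    {"department": "Sales", "associate": "jdoe", "duration_s": 120, "disposition": "Completed", "reason": "Billing"},
    {"department": "Sales", "associate": "jdoe", "duration_s": 45, "disposition": "Completed", "reason": None},
    {"department": "Sales", "associate": "asmith", "duration_s": 5, "disposition": "Completed", "reason": None},
    {"department": "Support", "associate": "blee", "duration_s": 60, "disposition": "Voicemail", "reason": None},
    {"department": "Support", "associate": "blee", "duration_s": 300, "disposition": "Completed", "reason": None},
]
by_department = missing_reason_summary(contacts, "department")
```

Passing `"associate"` or a precomputed week or month field as `group_key` gives the other slices without changing the filter.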

4. Generate the spreadsheet

Good, bad, or indifferent, Excel is everywhere and will be the most accessible way to present data that can be consumed tolerably by both people and machines. Most languages offer libraries for writing xlsx files. Many of the dynamic Excel features, like PivotTables and PivotCharts, are difficult to replicate with the xlsx export libraries typically used with Pandas. Unfortunately, this limits the amount of slicing and dicing your report consumers can easily do on their own without additional steps.

It's important to figure out a good approach to how you want to both slice and present the data to your users. I usually include a summary sheet with the highlights (e.g., a department-level summary; trends over the day, week, month, year; a summary chart, etc.), then break it down by the time period or team in separate sheets, then further by grouping within each sheet (e.g., overall, by team, by associate).

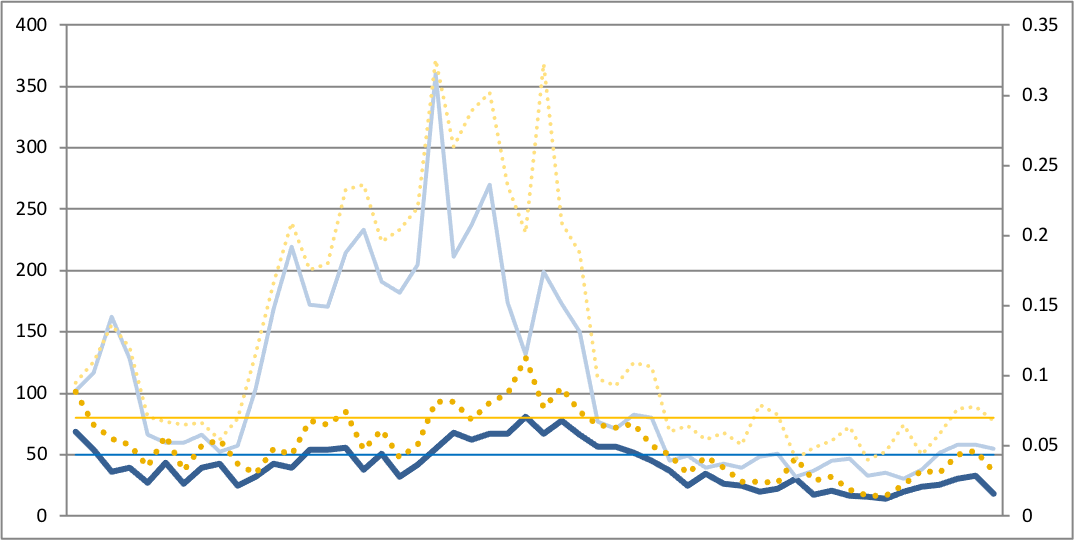

Charts are useful to help better visualize data. Conditional formatting can be used to emphasize how data falls along a range, identify outliers, or highlight metrics outside threshold.

If you're using Python, XlsxWriter is a good library for writing Excel spreadsheets and integrates well with Pandas. Defining features in the workbook can be fairly verbose, so this part of my scripts tends to take up a large portion of the total lines.

5. Send emails

Once the data is collected, collated, and prettified, it needs to be distributed. Email isn't necessarily the ideal format, especially if you want a level of interactivity with your data, but it is pervasive and well-supported.

Automation is typically simple, especially if you have access to SMTP. Emails can be generated with both HTML, for pretty tables and graphs, and plaintext, to support a variety of clients and user preferences. Attach the generated spreadsheet from step 4 to the email.

Python's built-in SMTP library is pretty straightforward to use, but you'll need to construct the multipart MIME message manually. It's non-obvious if you're not familiar with the format, but there are some nice examples in the Python docs.
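One way to assemble such a message is the standard library's `email.message.EmailMessage`, which takes care of the multipart nesting. This is a sketch under assumptions: the addresses, SMTP host, and port are placeholders, and your server may require TLS or authentication on top of this.

```python
import smtplib
from email.message import EmailMessage

XLSX_SUBTYPE = "vnd.openxmlformats-officedocument.spreadsheetml.sheet"


def build_report_email(sender, recipients, subject, text, html, xlsx_bytes, filename):
    """Build a message with plaintext and HTML bodies plus a spreadsheet attachment."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg["Subject"] = subject
    msg.set_content(text)                      # plaintext body
    msg.add_alternative(html, subtype="html")  # HTML alternative for capable clients
    msg.add_attachment(
        xlsx_bytes,
        maintype="application",
        subtype=XLSX_SUBTYPE,
        filename=filename,
    )
    return msg


def send(msg, host="smtp.example.com", port=25):
    """Send the message through a (placeholder) SMTP relay."""
    with smtplib.SMTP(host, port) as smtp:
        smtp.send_message(msg)
```

Setting the plaintext body first and adding the HTML as an alternative lets each mail client pick the version it prefers.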

Data presentation

Recipients shouldn't be expected to open up an attached spreadsheet to access any report information, but you also don't want to overwhelm the email body with the full content of the report. A high-level summary of the most relevant information and report highlights is ideal. For example, in a report of reasons customers contacted a contact center, include:

- The top-ten contact reasons by percentage for the report period, month, quarter, year, and last year.

- A weekly line graph of the top-five contact reasons for the year.

This way, the recipients can quickly get a good impression of the broad trends from opening the email without having to dive right into the spreadsheet.
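The top-N table for the email body could be produced with something like the following sketch. It takes a flat list of reason tags, one per contact, and renders both a plaintext and an HTML version. The column headings are made up for illustration.

```python
from collections import Counter
from html import escape


def top_reasons(reasons, n=10):
    """Return the n most common reasons as (reason, percent of all contacts)."""
    counts = Counter(reasons)
    total = sum(counts.values())
    return [
        (reason, round(100 * count / total, 1))
        for reason, count in counts.most_common(n)
    ]


def as_text(rows):
    """Render rows as fixed-width lines for the plaintext part of the email."""
    return "\n".join(f"{reason:<30}{pct:>6.1f}%" for reason, pct in rows)


def as_html(rows):
    """Render rows as a simple HTML table for the HTML part of the email."""
    body = "".join(
        f"<tr><td>{escape(reason)}</td><td>{pct:.1f}%</td></tr>"
        for reason, pct in rows
    )
    return f"<table><tr><th>Contact reason</th><th>Share</th></tr>{body}</table>"


reasons = ["Billing"] * 5 + ["Returns"] * 3 + ["Shipping"] * 2
rows = top_reasons(reasons, n=2)
```

Running it once per period (report period, month, quarter, year) gives the side-by-side comparison described above.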

Pandas styling can be used to apply basic conditional and number formatting to DataFrames that works with the HTML table generation.

Triggering scripts

Depending on how your data is being ingested, there are a few different ways you can trigger the script to run:

- At a set time interval - cron on Linux, launchd on macOS, or Task Scheduler on Windows.

- On a file action - incron on Linux or Hazel on macOS.

- Received email - Outlook rules with a VBA script on Windows. You can also trigger on the file action after you save the attachment with the script from the beginning of the post.

Conclusion

We've collected our data, cleaned it up, generated summaries, created a spreadsheet, and emailed the report out to the team, all with relatively little in the way of infrastructure. This isn't the Right Way to implement reporting automation, but given the constraints it's a fairly manageable and low-overhead way to do so.

Opportunities for improvement

Much of the above is built around specific constraints that are likely present in various flavors in many organizations, but in the absence of some or all of those constraints there are opportunities for improved processes and data presentation. Real business intelligence tooling likely obviates the need for almost all of the activities outlined above and the remaining efforts will usually take place within the BI platform, enabling things like real-time dashboards, interactive data visualization, notifications, and easier data synthesis.

As I said above, a marginal improvement to my situation would come with being able to provide an intranet site with interactive graphs, data presentation, and dashboards to make it easy to get real-time, specific, and on-demand access to the data.

[1] In an ideal world, I'd deploy an intranet site with interactive dashboards and save the emails for when metrics are going out of range, but even a basic intranet site is beyond my ability to get supported.

[2] This is where a low-code mess like Access usually gets proposed by somebody on the business side, or unilaterally implemented, and everybody who has to use it spends the next five years wanting to set themselves on fire. Combine with a low-bandwidth-shared-drive-over-VPN situation and no local caching and the pain is truly awesome.